Audience Testing Matrix

What is an audience testing matrix

An audience testing matrix is a systematic design that optimizes your organization's testing strategies. It can improve the quality of each test and increase the rate at which you can develop, execute, and scale tests.

In practice, an audience testing matrix is a set of premade groupings of your audience that allow business-level analysis of tests beyond the first-order KPIs that are normally analyzed. At the most basic level, these groupings are little more than predefined buckets your users fall into.

The why and how

By developing an audience testing matrix, organizations can more effectively test out new features, web designs, and marketing strategies while controlling for audience variance.

Most testing matrices break your entire audience into separate groups such as Long Term Holdout Groups (LTHGs), Production Environments/Control Groups, Testing Environments/Test Groups, and overlapping Test Groups/micro test groups.

This approach applies to most business units where specific users are known, such as accounts with logins, most DTC communication systems, and mobile app users. These matrices have some limitations with unknown audiences, such as Facebook ads and first-time website visitors.

In our example, we will work through how a hypothetical DTC communications team would use a testing matrix.

Overview

An audience test matrix generally aims to create predefined randomized groups, allowing teams to execute tests in a controlled environment.

Specifically, we will be able to:

Measure the overall lift of different tests and how they affect each other.

Ensure that when work is executed, we know exactly who received what in case errors occur during execution.

Better estimate audience size and conversion rates for any pre-test models you develop.

Ensure test and control groups have equal variation within themselves for test validity.

Get a better view of the holistic and downstream organizational effects the test may have on your audience.

Matrix Design

The matrix design will be based on randomized ID numbers. Most user tracking systems, including nearly all ESPs and CRMs, assign a unique ID, user ID, or account ID number that acts as the primary identifier for a user. This ID is usually either a standard base 10 (0-9) number or a hexadecimal (0-f) number and can be any number of digits long, usually seven or more. For simplicity, we will group users by the last digit of a base 10 ID number going forward.

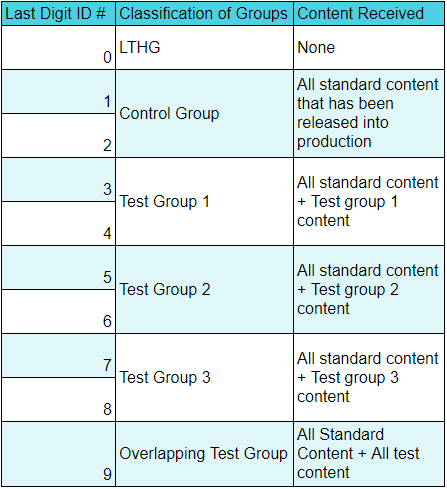
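A minimal Python sketch of last-digit bucketing, assuming IDs are available as strings. The function names `id_bucket`, `bucket_users`, and `bucket_shares` are hypothetical, not part of any ESP or CRM API. Bucketing by last digit only approximates random assignment if the last digit is effectively random in your system, so it is worth checking that bucket shares come out roughly equal before relying on them.

```python
from collections import Counter, defaultdict


def id_bucket(user_id: str, base: int = 10) -> int:
    """Return the last digit of a user ID as an integer bucket.

    base=10 gives buckets 0-9; base=16 treats the ID as hexadecimal and
    gives buckets 0-15. Raises ValueError if the last character is not a
    valid digit in the chosen base.
    """
    return int(user_id.strip()[-1], base)


def bucket_users(user_ids, base: int = 10) -> dict[int, list[str]]:
    """Group user IDs by their last-digit bucket."""
    buckets = defaultdict(list)
    for uid in user_ids:
        buckets[id_bucket(uid, base)].append(uid)
    return dict(buckets)


def bucket_shares(user_ids, base: int = 10) -> dict[int, float]:
    """Share of users in each bucket; each should sit near 1/base."""
    ids = list(user_ids)
    counts = Counter(id_bucket(uid, base) for uid in ids)
    return {bucket: counts[bucket] / len(ids) for bucket in sorted(counts)}
```

Running `bucket_shares` on a full export of user IDs is a quick sanity check: a bucket that is noticeably larger or smaller than the others suggests the last digit is not behaving randomly in that system.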

We then create a classification for each grouping and decide what content it will receive. We will use a Long Term Holdout Group (LTHG), Control Group, and various Test Groups. In our example, we group some ID numbers together. However, a more sophisticated system could assign a unique group to each ID number.

The LTHG will receive no content throughout the time period. See below for details.

The Control Group receives standard content. This is all of the approved content you are testing against.

The three Test Groups all receive unique tests. These could be tests launched by different business units in the organization or multiple tests launched by one team. Note: These users should continue to receive all content that the Control Group does, except what is being specifically tested against.

The Overlapping Test Group receives all tests and any standard content not being tested against. This guards against test cannibalization. We do not want to launch multiple tests only to learn later that they negatively affect one another.
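The group rules above can be expressed as a small lookup, sketched here in Python. The digit-to-group mapping is hypothetical (only Test Group 1 on digits 1 and 2 comes from the multivariate example later in this document), and the content names are placeholders. The point is the rule itself: each test group swaps out only the piece it tests, while the Overlapping Test Group swaps in every test.

```python
from enum import Enum


class Group(Enum):
    LTHG = "Long Term Holdout Group"
    CONTROL = "Control Group"
    TEST_1 = "Test Group 1"
    TEST_2 = "Test Group 2"
    TEST_3 = "Test Group 3"
    OVERLAP = "Overlapping Test Group"


# Hypothetical assignment of last ID digits to groups.
DIGIT_TO_GROUP = {
    0: Group.CONTROL, 8: Group.CONTROL,
    1: Group.TEST_1, 2: Group.TEST_1,
    3: Group.TEST_2, 4: Group.TEST_2,
    5: Group.TEST_3, 6: Group.TEST_3,
    7: Group.OVERLAP,
    9: Group.LTHG,
}

# Placeholder content: each test replaces one standard item with a variant.
STANDARD_CONTENT = ["onboarding_email", "post_purchase_email", "winback_email", "newsletter"]
TESTS = {
    Group.TEST_1: ("onboarding_email", "onboarding_email_test"),
    Group.TEST_2: ("post_purchase_email", "post_purchase_email_test"),
    Group.TEST_3: ("winback_email", "winback_email_test"),
}


def content_for(group: Group) -> list[str]:
    """Content a group receives under the matrix rules."""
    if group is Group.LTHG:
        return []  # the holdout receives nothing
    if group is Group.CONTROL:
        return list(STANDARD_CONTENT)
    # A test group swaps in its own test; the overlap group swaps in every test.
    swaps = dict(TESTS.values()) if group is Group.OVERLAP else dict([TESTS[group]])
    return [swaps.get(item, item) for item in STANDARD_CONTENT]
```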

A Note on the Long Term Holdout Group

The actual implementation of an LTHG can be nuanced and is very dependent on the organization. It is, however, very important to have. For example, suppose a marketing team has an onboarding email series that deploys to all new users, and 50% of users who receive it convert. That would look incredible, but if the same segment of users who did not receive it still converts at 45%, the series is responsible for only about 5 percentage points of lift, not 50%. In fact, we may find that the cost of running the series is greater than the value it produces. More discussion on LTHGs is in the Related Information section below.
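The arithmetic behind the onboarding example, as a short Python sketch. The function names are hypothetical, and the audience size, value per conversion, and program cost in the usage lines are made-up illustration values, not data from this document. The holdout conversion rate is what separates the series' incremental effect from conversions that would have happened anyway.

```python
def holdout_lift(treated_rate: float, holdout_rate: float) -> tuple[float, float]:
    """Absolute (percentage-point) and relative lift of treated users over the holdout."""
    absolute = treated_rate - holdout_rate
    return absolute, absolute / holdout_rate


def net_value(audience: int, treated_rate: float, holdout_rate: float,
              value_per_conversion: float, program_cost: float) -> float:
    """Value of the conversions the program actually caused, minus its cost."""
    incremental_conversions = audience * (treated_rate - holdout_rate)
    return incremental_conversions * value_per_conversion - program_cost


# The example from the text: 50% conversion with the series, 45% without it.
absolute, relative = holdout_lift(0.50, 0.45)  # about 0.05 and about 0.11

# Hypothetical economics: 10,000 new users, $20 per conversion, $8,000 program cost.
print(round(net_value(10_000, 0.50, 0.45, 20.0, 8_000.0)))  # prints 2000
```

In this made-up scenario the series pays for itself; at a $12,000 cost it would not, even though its headline 50% conversion rate would look exactly the same.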

Group rotations and variations

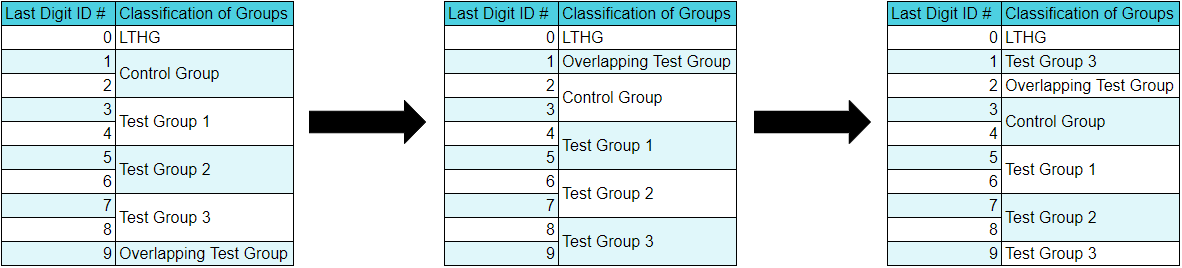

It is inevitable that some consumers will experience “testing fatigue.” Tests are meant to be bold, and although boldness will sometimes be the key to reaching our goals, some tests will fail. We want to ensure that users do not always receive the same tests and become frustrated. To account for this, it is best to rotate the groupings two to four times per year to keep audiences fresh.

You may notice that we are not changing the LTHG. To understand the ROI of the work as a whole, you want to keep some consistency throughout the year. We recommend changing the LTHG yearly. We also left ID# 0 the same to show that not all groups need to rotate. You may find that some tests need to run longer.
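One way to implement the rotation, sketched in Python under stated assumptions: the starting layout is hypothetical, digit 9 stands in for the LTHG, digit 0 is held in place as in the text, and the remaining labels shift by two digits per period so that paired digits move together and every rotating digit changes group. `assignment_for_period` is an illustrative name, not an existing tool.

```python
# Hypothetical period-0 layout; digit 9 is the LTHG, digit 0 does not rotate.
BASE_ASSIGNMENT = {
    0: "Control", 1: "Test 1", 2: "Test 1", 3: "Test 2", 4: "Test 2",
    5: "Test 3", 6: "Test 3", 7: "Overlap", 8: "Control", 9: "LTHG",
}
FIXED_DIGITS = {0, 9}


def assignment_for_period(period: int, step: int = 2) -> dict[int, str]:
    """Shift group labels across the non-fixed digits by `step` per period."""
    rotating = sorted(d for d in BASE_ASSIGNMENT if d not in FIXED_DIGITS)
    labels = [BASE_ASSIGNMENT[d] for d in rotating]
    shift = (period * step) % len(labels)
    # Move the last `shift` labels to the front (a right rotation); shift 0 is a no-op.
    rotated = labels[-shift:] + labels[:-shift]
    result = {d: BASE_ASSIGNMENT[d] for d in FIXED_DIGITS}
    result.update(zip(rotating, rotated))
    return dict(sorted(result.items()))


for quarter in range(1, 5):
    print(f"Q{quarter}", assignment_for_period(quarter - 1))
```

With eight rotating digits and a step of two, this particular layout returns to its starting point after four periods, so quarterly rotation repeats once a year, which happens to line up with a yearly LTHG change.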

Changing these groups around is not just to help reduce testing fatigue. It also serves the purpose of keeping teams on track. Accurately tracking organizational goals can be difficult. By having consistent reset periods where a test needs to be completed, you allow teams to report exactly how far along they are and make informed decisions on what to do next.

One way to implement this is to reserve the first month of each quarter for ending all active tests, deciding whether to push them to production, and deciding on and developing the next quarter’s tests. Those tests then run for the remaining two months, and the process repeats.
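The quarterly cadence can be turned into concrete dates. This Python sketch assumes calendar quarters and the one-month review, two-month run split described above; `quarter_schedule` and `phase_for` are hypothetical helper names.

```python
from datetime import date, timedelta


def quarter_schedule(year: int, quarter: int) -> dict[str, tuple[date, date]]:
    """Review/development window (month 1) and test window (months 2-3) for a quarter."""
    if quarter not in (1, 2, 3, 4):
        raise ValueError("quarter must be 1-4")
    first_month = 3 * (quarter - 1) + 1
    review_start = date(year, first_month, 1)
    run_start = date(year, first_month + 1, 1)
    # The quarter ends the day before the next quarter begins.
    next_quarter = date(year + 1, 1, 1) if quarter == 4 else date(year, first_month + 3, 1)
    return {
        "review_and_develop": (review_start, run_start - timedelta(days=1)),
        "tests_running": (run_start, next_quarter - timedelta(days=1)),
    }


def phase_for(day: date) -> str:
    """Which phase of the cycle a given date falls in."""
    return "review_and_develop" if (day.month - 1) % 3 == 0 else "tests_running"
```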

Group Variations

The example above is only one way to set up a testing matrix. Your organization may have enough users to develop a more complex system, or you may have so few users that you can only realistically run one test at a time. We used an example with some complexity that is still relatively easy to follow.

Downstream analysis

Most testing systems give you a strong view of the first-order KPIs for your test, such as clicks, views, opens, and form fills. However, they rarely give a good view across the full breadth of your organization. This is where testing based on ID# becomes more impactful. Because groups are defined by ID#, you can isolate exactly the same groups in any business unit's data as in your tests, and more accurately observe the possible effects a test has on your organization.

For example, when testing a new piece of informational content, you may see changes in the following:

The number of complaints users send to your support team.

The % of users who make it through the purchase funnel.

The overall satisfaction rating users give.

Word of mouth and referral sign-ups.
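Because groups are defined by ID#, any business unit's data that carries the user ID can be cut by the same groups. A minimal Python sketch, assuming you can export one user ID per event (for example, one per support complaint or referral sign-up) plus the full audience list; the function name and the digit mapping in the usage line are hypothetical, and the mapping must cover every last digit present in the data.

```python
from collections import Counter


def rate_by_group(event_user_ids, audience_ids, digit_to_group):
    """Events per user for each matrix group.

    event_user_ids: one user ID per event (e.g. each support complaint).
    audience_ids: every user ID in the audience, used as the denominator.
    digit_to_group: last ID digit -> group name (a hypothetical mapping).
    """
    def group_of(user_id: str) -> str:
        return digit_to_group[int(user_id[-1])]

    audience = Counter(group_of(uid) for uid in audience_ids)
    events = Counter(group_of(uid) for uid in event_user_ids)
    return {group: events[group] / size for group, size in audience.items()}


mapping = {1: "Test 1", 2: "Test 1", 3: "Control", 4: "Control"}
print(rate_by_group(["101", "101", "103"], ["101", "102", "103", "104"], mapping))
# prints {'Test 1': 1.0, 'Control': 0.5}
```

Comparing a test group's rate with the Control Group's rate is then a quick way to flag downstream shifts, such as more complaints, that the test's own KPIs would not show.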

Execution and expectations

Here are some helpful tips for optimizing tests run in parallel.

Ensure you are testing on different points in your user journeys. These are not multivariate tests but tests examining different parts of your business. For example, Group 1 is an onboarding test, Group 2 is a post-conversion event test, and Group 3 is a churned-user test.

Keep similar tests in similar groups. This way, as you rotate the groups, the types of tests will rotate with them.

Ensure groups are the correct size to run your tests in the desired timeframe. There is nothing wrong with combining two groups for testing.

Never have an empty test group. Even if you are testing something small like button color or hero images, always test something.

Remember, small incremental steps add up over time; not every test needs to be a game-changer.

Consider the specific goal each test is meant to accomplish. If a test isn’t tied to at least one goal, it may not be worth running.

Always run a pre-test analysis before development. Read more about Pre-Test Analysis.
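For the group-sizing tip, a rough pre-test check can be sketched in Python using the standard normal-approximation sample-size formula for comparing two conversion rates. The baseline and target rates, the traffic figure, and the function names are assumptions for illustration. It also assumes each last digit holds about 10% of eligible users and sizes only the test group (a control spread over more digits fills faster).

```python
import math
from statistics import NormalDist


def sample_size_per_group(p_control: float, p_test: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Users needed in each group to detect p_control vs p_test (two-sided test)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p_control + p_test) / 2
    spread = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
              + z_beta * math.sqrt(p_control * (1 - p_control) + p_test * (1 - p_test)))
    return math.ceil(spread ** 2 / (p_control - p_test) ** 2)


def days_to_fill(n_per_group: int, daily_eligible_users: int, digits_in_group: int) -> int:
    """Days until a group spanning `digits_in_group` last digits reaches n users."""
    per_day = daily_eligible_users * digits_in_group / 10
    return math.ceil(n_per_group / per_day)


n = sample_size_per_group(0.10, 0.12)  # about 3,840 users per group
print(n, days_to_fill(n, daily_eligible_users=2_000, digits_in_group=2))
```

If the number of days exceeds the two-month test window, combining two groups (raising `digits_in_group`) or testing a bolder change with a larger expected difference are the obvious levers.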

How do we build a functioning testing matrix? Every CRM and ESP will need its own execution strategy, but here are some strategies that apply consistently.

Create a specific tag/custom attribute/segment for each ID#. By doing this, whenever you set up a new test or control, you can filter by “has this tag/attribute/in this segment.”

Add time and group identifiers to the names of any campaigns or tests for easy tracking. Most systems will then let you search for Q2_2023 to find every campaign that ran that quarter, or for TestGroup3 to find every test run under that group ID.

Keep team assignments consistent, so the same team members, or teams in general, work in the same groups.

Create an SOP for how a segment or campaign should be set up. This will reduce errors and allow managers to review the work before launch to more easily catch mistakes.

Regularly run small audits on the systems you implement to ensure accuracy.
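The tagging, naming, and audit steps can be prototyped outside any particular ESP or CRM. This Python sketch uses hypothetical tag and name formats (`id_digit_7`, `Q2_2023_TestGroup1_...`); real systems have their own attribute and segment mechanics, so treat these as conventions to adapt rather than an API.

```python
def id_tag(user_id: str) -> str:
    """Tag or custom-attribute value for a user's last ID digit."""
    return f"id_digit_{user_id.strip()[-1]}"


def campaign_name(year: int, quarter: int, group: str, description: str) -> str:
    """Time and group identifiers up front so searches like 'Q2_2023' or 'TestGroup3' match."""
    return f"Q{quarter}_{year}_{group}_{description}"


def audit_recipients(recipient_ids, allowed_digits) -> list[str]:
    """Recipients whose last ID digit falls outside the group the campaign targeted."""
    return [uid for uid in recipient_ids if int(uid.strip()[-1]) not in allowed_digits]


# A Test Group 1 campaign should only reach IDs ending in 1 or 2.
name = campaign_name(2023, 2, "TestGroup1", "OnboardingSubjectLine")
stray = audit_recipients(["1000231", "1000242", "1000257"], allowed_digits={1, 2})
print(name, stray)  # Q2_2023_TestGroup1_OnboardingSubjectLine ['1000257']
```

A non-empty audit result is exactly the kind of execution error the matrix is meant to make traceable, since you know which group the stray recipients belonged to.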

Related Information

LTHG

The Long Term Holdout Group is one of the most controversial aspects of a testing matrix. Many people will not like the idea of users receiving no content, and that is a fair concern. If, for example, you see a 10% drop in conversions for the LTHG, you may want to eliminate it, since with 10% of your audience in the LTHG that nets a 1% drop overall. We stand by always keeping some of your users in a true LTHG, but if 10% (as in our example) is too much, try 1% of the audience. A 10% drop in 1% of your audience accounts for only a 0.1% drop overall. The size can continue to shrink as much as needed.
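The percentages in this paragraph follow from a one-line derivation. Let p be the conversion rate when users receive content, s the share of the audience in the LTHG, and d the relative drop in conversions within the LTHG (symbols introduced here for illustration). Compared with everyone receiving content:

```latex
\text{overall rate} = (1 - s)\,p + s\,p\,(1 - d) = p\,(1 - s\,d)
\qquad\Rightarrow\qquad
\text{relative overall drop} = s\,d
% s = 0.10, d = 0.10:  s d = 0.01   (1% overall)
% s = 0.01, d = 0.10:  s d = 0.001  (0.1% overall)
```

Because the overall cost scales linearly with s, shrinking the holdout reduces its cost proportionally while still preserving a true no-content baseline.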

The LTHG is also crucial for learning about audience segments. If you only look at improving a specific content stream, you may miss that this content stream actually performs poorly for a subsection of your audience and that a new strategy should be developed.

Multivariate Testing

Ideally, you would run a multivariate test within a specific test group. For example, if you want to run four versions of your test in one test group, you can break that group into four individual sections. In our original example, Test Group 1 was on ID #1 and #2. We could split that audience again by looking at the last two digits: IDs ending in 01, 11, 21, 31, or 41 would be A; 51, 61, 71, 81, or 91 would be B; 02, 12, 22, 32, or 42 would be C; and 52, 62, 72, 82, or 92 would be D. There is no need to break out a control within the test group, as a separate group is already running as the primary control.
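The two-digit split can be sketched in Python. It assumes IDs are base 10 strings of at least two digits and that Test Group 1 occupies last digits 1 and 2, as in the example; `multivariate_arm` is a hypothetical name.

```python
from typing import Optional


def multivariate_arm(user_id: str) -> Optional[str]:
    """Assign a Test Group 1 user to arm A-D from the last two ID digits."""
    uid = user_id.strip()
    last, tens = int(uid[-1]), int(uid[-2])
    if last == 1:
        return "A" if tens <= 4 else "B"  # 01-41 -> A, 51-91 -> B
    if last == 2:
        return "C" if tens <= 4 else "D"  # 02-42 -> C, 52-92 -> D
    return None  # not in Test Group 1
```

If tens digits are evenly distributed, each arm receives about a quarter of Test Group 1, or 5% of the total audience.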

Qualitative testing validation

One common problem when developing less quantitative tests is proving the method is consistently valid.

In other words, if your copy team says they want to use “happier” or “more friendly” language in content, it can be difficult to know whether that is a good idea. Even if a test reaches statistical significance, all you have learned is that one specific copy variant outperformed another, not that the change in tone is superior overall. To validate changes in tone, imagery, or colors, check for repeatability across variations. For example, take a specific test group, break it into ten subsections, and test five variants of the old tone against five variants of the new tone.
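One way to analyze the ten-subsection design is an exact permutation test that treats each copy variant, rather than each user, as one observation. This is a technique chosen here for illustration, not one the document prescribes. The sketch assumes subsections are formed from the second-to-last ID digit and that you have one conversion rate per variant; the function names are hypothetical.

```python
from itertools import combinations
from statistics import mean


def subsection(user_id: str) -> int:
    """Split a test group into ten subsections by the second-to-last ID digit."""
    return int(user_id.strip()[-2])


def tone_permutation_p_value(old_rates, new_rates) -> float:
    """One-sided exact permutation test on variant-level conversion rates.

    A small p-value means the new tone beat the old tone consistently
    across variants, not just in one lucky piece of copy.
    """
    old_rates, new_rates = list(old_rates), list(new_rates)
    pooled = old_rates + new_rates
    observed = mean(new_rates) - mean(old_rates)
    hits = total = 0
    # Try every way of labeling len(new_rates) of the pooled variants as "new tone".
    for chosen in combinations(range(len(pooled)), len(new_rates)):
        chosen_set = set(chosen)
        group_new = [pooled[i] for i in chosen]
        group_old = [pooled[i] for i in range(len(pooled)) if i not in chosen_set]
        total += 1
        if mean(group_new) - mean(group_old) >= observed:
            hits += 1
    return hits / total
```

With five variants per tone there are 252 possible labelings, so the smallest achievable p-value is 1/252 (about 0.004), reached when every new-tone variant outperforms every old-tone variant.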

AI-generated content tests

As more and more organizations move to AI-generated content, it is important to observe the net effect it has throughout your organization. A single piece of media may not see a drop in engagement, but by connecting these tests to the rest of your business units, you can better observe unexpected changes in user behavior. Developing a Long Term AI Group may have several advantages. Read more about AI Testing.